Multimedia Architecture

Introduction

This document has been reviewed for maemo 3.x.

This document describes, at a conceptual level, how the multimedia components are organized both on the physical device and in the maemo SDK.

It is important to understand that an application that works on the x86 target in the SDK may still need changes before it works on the actual device, because the two multimedia architectures differ. To create multimedia applications, you need to know both.

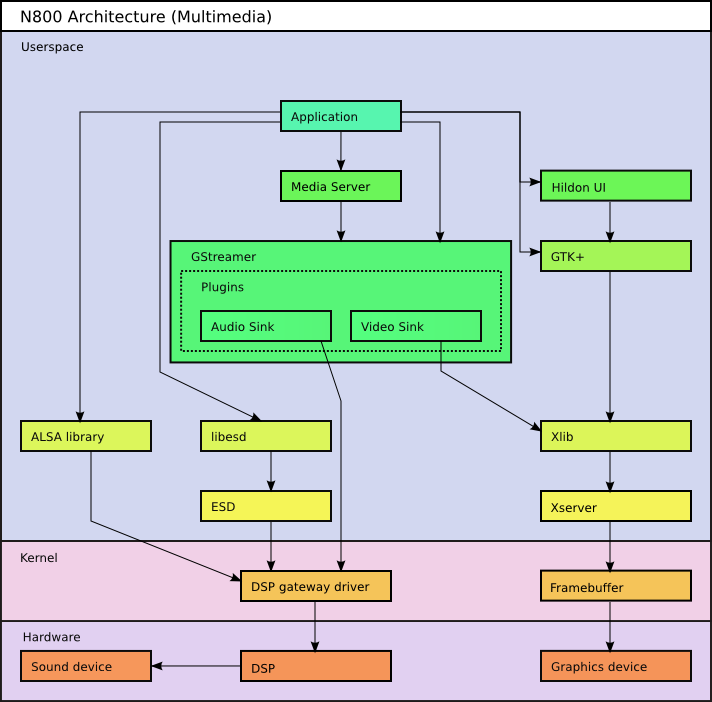

N800 architecture

The following diagram illustrates the multimedia architecture in a slightly simplified way:

Components:

- Application is the software that uses the multimedia capabilities of the device.

- Media Server is a daemon running on the device that the platform uses to handle audio and video. It is not included in the SDK; it exists only on the actual device, where it provides media support for the pre-installed software.

- GStreamer is a media processing framework. Developers can write new plugins to add support for new formats to existing applications. GStreamer gives applications precise control over the stream processing pipeline, for example for adding effects, seeking and media type detection.

- Plugins are GStreamer components: loadable libraries that provide elements for processing audio and video streams.

- Audio and video sinks are the GStreamer elements that send media data to the hardware (through a kernel driver) for playback.

- libesd is the library applications use to access the EsounD daemon. It provides simple raw PCM audio playback and recording.

- ESD (EsounD) is a daemon that acts as a software mixer, letting several PCM audio streams use the audio hardware simultaneously.

- alsa-lib is the user-space library that applications use to access ALSA functionality. It also provides simple raw PCM audio playback and recording.

- ALSA (Advanced Linux Sound Architecture) is the Linux sound driver framework.

- DSP is the kernel driver between user-space code and the hardware DSP.

- Hildon UI is a graphical user interface designed for use on small mobile devices. It is built on top of GTK+.

- GTK+ (the GIMP Toolkit) is a multi-platform toolkit for creating graphical user interfaces. Applications can use GTK+ directly as well as through Hildon UI.

- Xlib is a low-level library for displaying graphical elements.

- X server is the part of the platform that draws graphics on the screen. The maemo X server is optimized for embedded use and for the specific hardware platform.

- Framebuffer is a memory area where displayable data is written.

Audio

The N800 does not contain a separate sound card. Audio streams are forwarded to the DSP, which mixes them. In addition to plain PCM audio streams, the DSP can also receive encoded audio streams, such as MP3, AMR and AAC, and decode them itself. Offloading this work to the DSP saves computing resources on the main processor and extends battery life.

Table 1. Supported audio codecs

| Format | Decoded with | Description | GStreamer Element |
|---|---|---|---|
| PCM | N/A | Raw PCM audio | dsppcmsink, dsppcmsrc |
| MP2 | DSP | MPEG-1 Audio Layer 2 | dspmp3sink |
| MP3 | DSP | MPEG-1 Audio Layer 3 | dspmp3sink |
| AAC | DSP | Advanced Audio Coding; only the LC and LTP profiles are supported | dspaacsink |
| AMR-NB | DSP | Adaptive Multi-Rate narrowband | dspamrsink |
| AMR-WB | DSP | Adaptive Multi-Rate wideband | dspamrsink |
| IMA ADPCM | CPU | Adaptive Differential Pulse Code Modulation | adpcmdec |
| G.711 a-law | DSP | ITU-T standard for audio companding | dsppcmsink, dsppcmsrc |
| G.711 mu-law | DSP | ITU-T standard for audio companding | dsppcmsink, dsppcmsrc |
| G.729 (OSSO 1.0) | DSP | ITU-T standard for speech | |
| RA 8, 9, 10 | CPU | RealAudio 8, 9, 10 (through Helix) | |
| WMA | CPU | Windows Media Audio | fluwmadec |
| iLBC | DSP | Internet Low Bitrate Codec | dspilbcsink, dspilbcsrc |
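
As a rough illustration of how an application might use Table 1, the C sketch below encodes the table as a lookup from format name to decoding unit and GStreamer element. The format keys (for example "RA" for RealAudio 8, 9, 10), the `audio_format_t` type and `find_audio_format()` are hypothetical names chosen for this example; only the element names come from the table. Where the table lists both a sink and a source, only the playback element is kept.

```c
#include <stddef.h>
#include <string.h>

/* "Decoded with" column of Table 1; DECODER_NONE stands for N/A (raw PCM). */
typedef enum { DECODER_NONE, DECODER_DSP, DECODER_CPU } decoder_t;

typedef struct {
    const char *format;   /* hypothetical short key for the table row */
    decoder_t   decoder;  /* where the N800 decodes the format */
    const char *element;  /* first element listed; NULL where none is listed */
} audio_format_t;

static const audio_format_t audio_formats[] = {
    { "PCM",          DECODER_NONE, "dsppcmsink"  },
    { "MP2",          DECODER_DSP,  "dspmp3sink"  },
    { "MP3",          DECODER_DSP,  "dspmp3sink"  },
    { "AAC",          DECODER_DSP,  "dspaacsink"  },
    { "AMR-NB",       DECODER_DSP,  "dspamrsink"  },
    { "AMR-WB",       DECODER_DSP,  "dspamrsink"  },
    { "IMA ADPCM",    DECODER_CPU,  "adpcmdec"    },
    { "G.711 a-law",  DECODER_DSP,  "dsppcmsink"  },
    { "G.711 mu-law", DECODER_DSP,  "dsppcmsink"  },
    { "G.729",        DECODER_DSP,  NULL          },
    { "RA",           DECODER_CPU,  NULL          },
    { "WMA",          DECODER_CPU,  "fluwmadec"   },
    { "iLBC",         DECODER_DSP,  "dspilbcsink" },
};

/* Returns the table row for a format key, or NULL if the format is not listed. */
static const audio_format_t *find_audio_format(const char *format)
{
    size_t n = sizeof audio_formats / sizeof audio_formats[0];
    for (size_t i = 0; i < n; i++) {
        if (strcmp(audio_formats[i].format, format) == 0)
            return &audio_formats[i];
    }
    return NULL;
}
```

For example, `find_audio_format("MP3")` returns a row with `DECODER_DSP` and `dspmp3sink`, which tells the application that MP3 playback can be handed to the DSP.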

An application that records or plays audio has three options: the GStreamer framework, the ALSA API (through alsa-lib), or the EsounD daemon (through libesd). In general, you should use GStreamer.

Video

Besides audio, the DSP can also handle some encoded video formats, but all of the video codecs listed in Table 2 are decoded on the main CPU. If you are writing a multimedia application that handles video streams, use the GStreamer framework.

Table 2. Supported video codecs

| Video codec | Decoded with | Description | GStreamer Element |
|---|---|---|---|
| MPEG | CPU | MPEG-1 video | ffdec_mpegvideo |
| MPEG4 | CPU | MPEG-4 video | hantro4100dec, hantro4200enc |
| H263 | CPU | H.263 | hantro4100dec, hantro4200enc |
| RV 8,9,10 | CPU | RealVideo 8,9,10 (Through Helix) | |

Containers

Muxing and demuxing container formats is always done on the main CPU.

Table 3. Supported containers

| Container | Video codecs | Audio codecs | Description | GStreamer Element |
|---|---|---|---|---|
| MPG | MPEG1 | MP2 | MPEG-1 container format | mpegdemux |
| AVI | MPEG4, H263 | MP3 | Audio Video Interleave container format | avidemux |
| 3GP | MPEG4, H263 | AAC, AMR-NB, AMR-WB | 3GP container format | demux3gp |
| RM | RV 8,9,10 | RA 8,9,10 | RealMedia container | |
| WAV | - | MP3, PCM | WAV container | wavparse |
| ASF | - | WMA | Advanced Streaming Format container | fluasfdemux |
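
Table 3 can likewise be turned into a demuxer lookup. The sketch below guesses the container from the file extension, an assumption made only for brevity; a real application would more likely rely on GStreamer's media type detection. `demuxer_for_file()`, the `container_t` type and the lowercase extension keys are hypothetical; the element names are those from Table 3.

```c
#include <stddef.h>
#include <string.h>

/* One row of Table 3, keyed by a lowercase file extension (hypothetical). */
typedef struct {
    const char *extension;
    const char *demuxer;   /* NULL where Table 3 lists no element */
} container_t;

static const container_t containers[] = {
    { "mpg", "mpegdemux"   },
    { "avi", "avidemux"    },
    { "3gp", "demux3gp"    },
    { "rm",  NULL          },  /* RealMedia: no element listed */
    { "wav", "wavparse"    },
    { "asf", "fluasfdemux" },
};

/* Returns the demuxer for filename based on its extension (case-sensitive),
 * or NULL if the extension is unknown or no element is listed for it. */
static const char *demuxer_for_file(const char *filename)
{
    const char *dot = strrchr(filename, '.');
    if (dot == NULL)
        return NULL;
    for (size_t i = 0; i < sizeof containers / sizeof containers[0]; i++) {
        if (strcmp(dot + 1, containers[i].extension) == 0)
            return containers[i].demuxer;
    }
    return NULL;
}
```

Because demuxing always happens on the main CPU, the element returned here runs on the CPU even when the codec inside the container is then decoded by the DSP.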

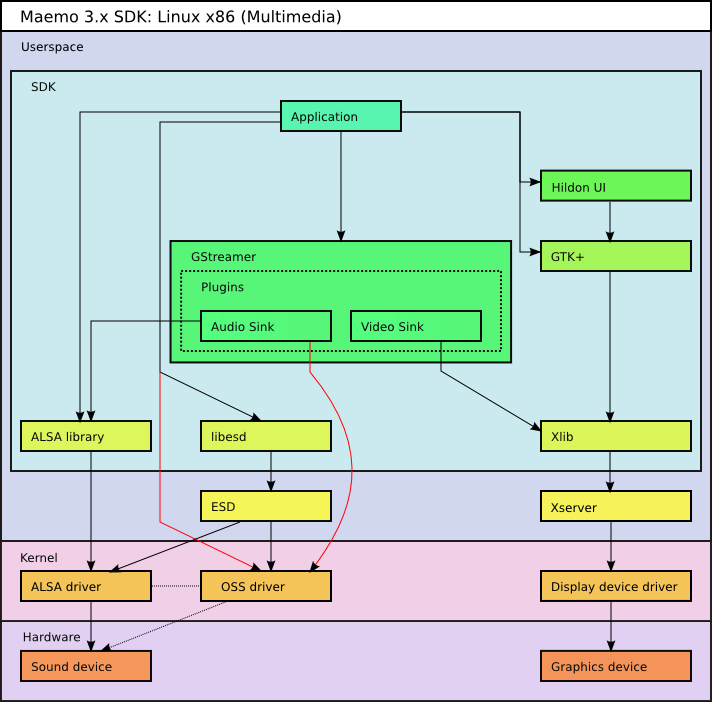

SDK architecture (X86 target)

The SDK is different from the physical device. This section describes its architecture and how it differs from the physical device.

Because the x86 SDK has neither a DSP nor any way to simulate one, all formats and streams are decoded on the main CPU.

The X server is the graphical windowing system running on the workstation. It is usually Xnest or Xephyr, which in turn displays its output through the host's XFree86 or X.Org server.
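
For code that consults the "Decoded with" columns of the tables above, the practical consequence is that DSP entries turn into CPU work in the SDK. A minimal sketch of that rule follows, using a hypothetical `unit_t` enum and `effective_decoder()` helper. How an application detects that it runs in the SDK is left to the caller; checking GCC architecture macros such as `__i386__` is one possible, unverified approach.

```c
#include <stdbool.h>

/* Values of the "Decoded with" column; UNIT_NONE stands for N/A (raw PCM). */
typedef enum { UNIT_NONE, UNIT_DSP, UNIT_CPU } unit_t;

/* Returns where a format is actually decoded. on_device is the value the
 * tables give for the N800. The x86 SDK has no DSP, so DSP work falls back
 * to the main CPU there; every other value is returned unchanged. */
static unit_t effective_decoder(unit_t on_device, bool is_x86_sdk)
{
    if (is_x86_sdk && on_device == UNIT_DSP)
        return UNIT_CPU;
    return on_device;
}
```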

Audio

GNU/Linux provides two low-level interfaces for handling sound streams: OSS and the ALSA API.

OSS does not support multiple simultaneous sound sources. Therefore, it is not possible to use the EsounD daemon and generate audio with GStreamer at the same time.
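
Sharing one output device between several sources requires a software mixer, which is the role ESD plays. The core of such a mixer is summing the streams sample by sample and clamping the result to the valid sample range. The sketch below shows that idea for signed 16-bit PCM; it is a simplified illustration rather than ESD's actual implementation, and `mix_s16()` is a hypothetical name.

```c
#include <stddef.h>
#include <stdint.h>

/* Mixes n samples of two signed 16-bit PCM streams into out by summing them
 * in a wider type and clamping the result to the int16_t range. */
static void mix_s16(const int16_t *a, const int16_t *b, int16_t *out, size_t n)
{
    for (size_t i = 0; i < n; i++) {
        int32_t sum = (int32_t)a[i] + (int32_t)b[i];
        if (sum > INT16_MAX)
            sum = INT16_MAX;       /* clip positive overflow */
        else if (sum < INT16_MIN)
            sum = INT16_MIN;       /* clip negative overflow */
        out[i] = (int16_t)sum;
    }
}
```

Clamping, rather than letting the sum wrap around, keeps loud passages from turning into sharp noise when the streams overlap.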

GStreamer

GStreamer is a framework for constructing graphs of media-handling components, ranging from simple audio playback to complex audio processing (such as mixing) and video processing (such as non-linear editing). Applications benefit transparently from advances in codec and filter technology, and developers can add new codecs and filters by writing plugins.

The N800 uses GStreamer 0.10.x plus some device-specific elements for accessing the DSP.

For more information about GStreamer, see its documentation.
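
Since a GStreamer pipeline is a graph of elements, a simple playback graph on the device can be written as a gst-launch style description and passed to `gst_parse_launch()`, which GStreamer 0.10 provides, before setting the resulting pipeline to the playing state with `gst_element_set_state()`. The sketch below only builds that description string with standard C. It assumes that a DSP sink such as `dspmp3sink` accepts the encoded stream read by `filesrc` without a separate parser element, which you should verify on the device; the helper name and the example path are hypothetical. Locations containing spaces are rejected because the sketch does not quote them.

```c
#include <stdio.h>
#include <string.h>

/* Writes "filesrc location=<location> ! <sink>" into buf. Returns the length
 * of the description, or -1 if location contains a space (quoting is not
 * handled here) or if buf is too small to hold the whole string. */
static int build_playback_pipeline(char *buf, size_t size,
                                   const char *location, const char *sink)
{
    if (strchr(location, ' ') != NULL)
        return -1;
    int n = snprintf(buf, size, "filesrc location=%s ! %s", location, sink);
    if (n < 0 || (size_t)n >= size)
        return -1;
    return n;
}
```

With location `/home/user/MyDocs/song.mp3` and sink `dspmp3sink`, the buffer receives `filesrc location=/home/user/MyDocs/song.mp3 ! dspmp3sink`.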

EsounD daemon

For more information about EsounD, download the source tarball from ftp://ftp.gnome.org/pub/gnome/sources/esound/0.2/. The currently used version is 0.2.35.

From the application's point of view, the EsounD interface is identical in both the target platform and the SDK.
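
Because libesd deals in raw PCM, the application has to produce the sample data itself. The sketch below fills a buffer with interleaved signed 16-bit stereo frames holding a square-wave tone, a layout that corresponds to the libesd format flags `ESD_BITS16 | ESD_STEREO`. Such a buffer could then be written to the socket returned by `esd_play_stream_fallback()`; check `esd.h` on your target for the exact flags and signature. The square wave (chosen to avoid linking the math library), `fill_square_wave()` and its parameters are assumptions made for this example.

```c
#include <stddef.h>
#include <stdint.h>

/* Fills buf (room for 2 * frames samples) with interleaved signed 16-bit
 * stereo frames of a square wave near freq Hz at a sample rate of rate Hz.
 * freq must be non-zero and amplitude positive. Returns the number of bytes
 * filled, i.e. how much to write to the libesd stream. */
static size_t fill_square_wave(int16_t *buf, size_t frames, unsigned rate,
                               unsigned freq, int16_t amplitude)
{
    size_t half_period = rate / (2u * freq);  /* frames per half cycle */
    if (half_period == 0)
        half_period = 1;
    for (size_t i = 0; i < frames; i++) {
        int16_t s = ((i / half_period) % 2 == 0) ? amplitude
                                                 : (int16_t)-amplitude;
        buf[2 * i] = s;      /* left channel */
        buf[2 * i + 1] = s;  /* right channel */
    }
    return frames * 2 * sizeof(int16_t);
}
```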

ALSA

For more information about ALSA, see its documentation at: http://www.alsa-project.org/main/index.php/Documentation.

From the application's point of view, the alsa-lib interface is identical in both the target platform and the SDK.
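
When working with alsa-lib directly, buffer and period sizes are usually expressed in frames, where one frame holds one sample per channel. The helpers below do the frame, byte and time conversions by hand. alsa-lib itself offers `snd_pcm_frames_to_bytes()` for an opened PCM handle, and later alsa-lib releases also provide `snd_pcm_set_params()`, whose latency argument is in microseconds; whether the alsa-lib version in maemo 3.x includes the latter is an assumption to check. The function names below are hypothetical.

```c
#include <stdint.h>

/* Size in bytes of one frame: one sample for every channel. */
static unsigned bytes_per_frame(unsigned channels, unsigned bits_per_sample)
{
    return channels * (bits_per_sample / 8);
}

/* Duration of frames frames at rate Hz, in whole microseconds (rounded
 * down). rate must be non-zero. */
static uint64_t frames_to_us(uint64_t frames, unsigned rate)
{
    return frames * 1000000u / rate;
}
```

For example, 16-bit stereo uses 4 bytes per frame, and a 1024-frame buffer at 48000 Hz holds about 21.3 ms of audio (`frames_to_us(1024, 48000)` returns 21333).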
